3a. Research#

In chapter 5, we discussed how to scope and prioritize what should be researched as an outcome of the RCDS process. Now we will focus on how to conduct research as the first phase of the DCDL. Detection engineering is all about understanding threats and eliminating or reducing them. Research is a comprehensive and sometimes complex process in itself. It serves as both a means and an end: it supports the creation of other artifacts and content, and it also acts as an information channel. It is both directly and indirectly valuable, providing specific details as well as a more generalized understanding of malicious tendencies, capabilities, and tradecraft.

Threat research is often described in some of the following non-mutually-exclusive categories:

- First party
  - Conducting brand new or partially new research
  - New and novel approaches to existing research, vulnerabilities, or threats
  - Existing research applied to new technologies
- Second party
  - Conducting first party research directly for someone else
  - Curated threat intel feed
- Third party
  - Applied derivative research based on first party research and observations
  - Processing and translating a threat report for the creation of security rules or signatures
  - Consumption of a threat feed
- Commodity
  - A focus on commodity malware, common tradecraft, or “low hanging fruit”
- Novelty
  - An emphasis and focus on new techniques or unexplored threat surfaces

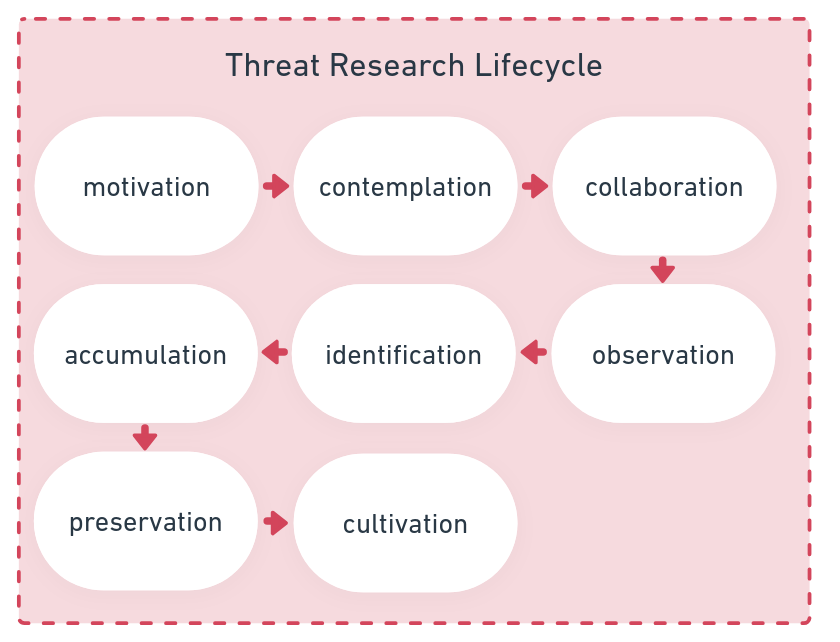

Fig. 28 TRL#

The purpose of the TRL is to create a repeatable and sustainable approach to conducting collaborative research and preserving it in an easily retrievable and understandable manner. Much of the first three steps (motivation, contemplation, and collaboration) will likely have been explored already as part of the RCDS, when deciding whether to prioritize the work at all and reviewing risk, complexity, and prevalence, among other factors.

3ai1. Motivation#

Consider whether the expected outcomes justify the cost of conducting the specific research.

Considerations:

- How prevalent is the threat or technology being abused?
- How severe is the impact of success?
- How complex is the threat to exploit?
- Does the subject matter overlap with the interests and expertise of the staff?
- What is the greatest expected value of the resulting research?
- Is there any existing overlapping research?
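
One way to make these considerations comparable across candidate research topics is a simple weighted score. The sketch below is illustrative only: the `ResearchCandidate` fields, the 1 to 5 rating scales, and the weights are all assumptions, not part of the RCDS or the TRL. It also assumes that threats which are harder to exploit, or which are already well covered by existing research, are less pressing.

```python
from dataclasses import dataclass


@dataclass
class ResearchCandidate:
    """Hypothetical record; every factor is rated from 1 (low) to 5 (high)."""
    name: str
    prevalence: int          # how widely the threat or technology is abused
    impact: int              # severity if exploitation succeeds
    exploit_complexity: int  # how hard the threat is to exploit
    staff_fit: int           # overlap with staff interests and expertise
    existing_coverage: int   # how much overlapping research already exists


# Assumed weights (they sum to 1.0); tune them to your own priorities.
WEIGHTS = {
    "prevalence": 0.30,
    "impact": 0.30,
    "exploit_complexity": 0.15,
    "staff_fit": 0.15,
    "existing_coverage": 0.10,
}


def motivation_score(c: ResearchCandidate) -> float:
    """Return a score from 1 to 5; higher suggests the research is better justified."""
    # Exploit complexity and existing coverage lower the score,
    # so their 1-5 scales are inverted with (6 - value).
    score = (
        WEIGHTS["prevalence"] * c.prevalence
        + WEIGHTS["impact"] * c.impact
        + WEIGHTS["exploit_complexity"] * (6 - c.exploit_complexity)
        + WEIGHTS["staff_fit"] * c.staff_fit
        + WEIGHTS["existing_coverage"] * (6 - c.existing_coverage)
    )
    return round(score, 2)
```

A score like this is only a conversation aid for ranking candidates against each other; it does not replace the judgment applied during the RCDS.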

3ai2. Contemplation#

Consider what is expected to be produced and how it relates to the outcomes of the previous step. There is some overlap between the considerations over the first three steps, but reassessing them within this context provides more obvious insights.

Considerations:

- Which general buckets will or may apply (complexity, novelty, depth, and breadth)?
- What is known about the technology?
- What is known about existing threats and vulnerabilities?
- Are the applicable expertise, knowledge, and skills available?
- How much of an investment will it take to close knowledge and skill gaps?
- What is the likelihood of this being vulnerable and actively exploited?
- What is the prevalence and impact of this if compromised?

3ai3. Collaboration#

This step represents the formal kickoff of the research. As a last preparatory step, decide whether this is an individual research project or a collaborative effort. Once the research begins, a shared understanding of expectations, timing, and deliverables will make the process smoother.

Considerations:

- Would this research be more effective with more than one person?
- Do multiple people, between them, possess the required skills and knowledge?
- Is there anyone available with the bandwidth to contribute?
- How will findings be shared?
- How will it be decided when to pivot and what to focus on based on intermediate findings?
- Is it parallel or guided research, and if guided, who should lead it?

3ai4. Observation#

This step will occur throughout the duration of the research, and is focused on observing the results of different inputs and manipulations to the target technology. At this phase, it is mostly about passively consuming the results.

Considerations:

- What are the outcomes of the research and manipulations?
- Are things working as expected?
- Are the things that are not working as expected exploitable?
- Do failures fail safe or fail unsafe?
- What evidence is created?
  - Logging, errors, etc.
- Are there repeatable patterns or outliers?
- Are there any time-sensitive reactions?
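
The question of repeatable patterns versus outliers can be approached by repeating the same experiment several times and comparing which events show up in each run. The following is a minimal sketch under stated assumptions: `classify_events` is a hypothetical helper, and the event names are placeholder strings standing in for whatever your logging actually produces.

```python
from collections import Counter


def classify_events(runs: list[list[str]]) -> tuple[set[str], set[str]]:
    """Split observed event names into repeatable patterns and outliers.

    runs: one list of event names per repetition of the same experiment.
    Returns (repeatable, outliers).
    """
    # Count the number of runs in which each event appeared at least once.
    seen_in = Counter(event for run in runs for event in set(run))
    # Repeatable: the event appeared in every run.
    repeatable = {e for e, n in seen_in.items() if n == len(runs)}
    # Outlier: the event appeared in exactly one of several runs.
    outliers = {e for e, n in seen_in.items() if n == 1 and len(runs) > 1}
    return repeatable, outliers


runs = [
    ["process_create", "dns_query"],
    ["process_create", "dns_query", "service_crash"],
    ["process_create"],
]
repeatable, outliers = classify_events(runs)
# repeatable == {"process_create"}; outliers == {"service_crash"}
```

Events that land in neither set (here `dns_query`, seen in two of three runs) are often worth a closer look, since intermittent behavior may point to timing or environmental sensitivity.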

3ai5. Identification#

This step takes the results from observation and processes them to see whether anything significant or applicable stands out that can be leveraged for detection engineering efforts.

Considerations:

- Opportunities for exploitation, detection, or prevention
- Opportunities for other defensive capabilities
- Opportunities to triage and analyze
- Opportunities to enhance logging or event captures
- Corroborate with existing internal and external research

3ai6. Accumulation#

This step is meant to force intentionality around best practices for retrieving and storing the observations and evidence with minimal unintentional manipulation from the research process. Systems and malware can be very sensitive to certain activity (malware may incorporate anti-debugging techniques, for example), so this step may require repeating the process using multiple distinct approaches.

Considerations:

- What is the best way to retrieve evidence?
- What is the best way to store it?
- What is the best way to categorize and organize it?
- What susceptibility exists to unintentional manipulation?
- What should be preserved about the process of capturing it (such as screenshots)?
- Can specific tooling or automation simplify this?
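
As one example of tooling that can simplify this, the sketch below records a SHA-256 hash and basic capture metadata for every file in an evidence directory, so that later manipulation can be detected by re-hashing. It is a minimal illustration, not a prescribed workflow: the function names, the manifest fields, and the `method` label are assumptions.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks, reading it in binary mode without writing to it."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(evidence_dir: Path, method: str) -> list[dict]:
    """Record path, size, hash, and capture method for each evidence file."""
    recorded_at = datetime.now(timezone.utc).isoformat()
    return [
        {
            "file": p.relative_to(evidence_dir).as_posix(),
            "size_bytes": p.stat().st_size,
            "sha256": sha256_of(p),
            "capture_method": method,  # free-text label, e.g. how it was collected
            "recorded_at": recorded_at,
        }
        for p in sorted(evidence_dir.rglob("*"))
        if p.is_file()
    ]
```

Writing the manifest out (for example with `json.dumps(manifest, indent=2)`) and storing it alongside the evidence gives a baseline to compare against whenever the files are copied or revisited.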

3ai7. Preservation#

This step is concerned with the long-term storage of observables, evidence, and artifacts, as well as any specific tooling that was created along the way. Remember, it is all about simplification for future reference and recall. Additionally, context should be preserved to give insight into the artifacts themselves, so internal notes and reporting are imperative.

Considerations:

- What is the best way to organize outcomes of all of the previous steps?
- What is the best way to store it?
- What is the best way to reference it?
- What is the best way to maintain it?
- What safety precautions are needed to prevent unintentional future infection?
- What is the best way to store context of findings?
- How should it be categorized and tagged for future awareness and similarity?
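
Tagging pays off when earlier research can be surfaced automatically as new work begins. The sketch below is one possible approach, not a required one: it assumes a hypothetical `ResearchRecord` with a free-form tag set and uses Jaccard similarity (shared tags divided by all tags) to find related records. The `0.3` threshold is an arbitrary starting point.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchRecord:
    """Hypothetical archive entry linking context to preserved artifacts."""
    title: str
    summary: str
    artifact_hashes: list[str] = field(default_factory=list)
    tags: set[str] = field(default_factory=set)


def tag_similarity(a: ResearchRecord, b: ResearchRecord) -> float:
    """Jaccard similarity of two tag sets: 0.0 (disjoint) to 1.0 (identical)."""
    union = a.tags | b.tags
    if not union:
        return 0.0
    return len(a.tags & b.tags) / len(union)


def find_related(
    new: ResearchRecord,
    archive: list[ResearchRecord],
    threshold: float = 0.3,
) -> list[ResearchRecord]:
    """Return archived records at or above the threshold, most similar first."""
    scored = [(tag_similarity(new, r), r) for r in archive]
    matches = [(s, r) for s, r in scored if s >= threshold]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in matches]
```

A consistent tag vocabulary matters more than the similarity measure; free-form tags that drift over time will quietly reduce how much prior research is found.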

3ai8. Cultivation#

As stated previously, the primary point is to make this process so repeatable that it no longer feels like following a playbook or lifecycle, but is instead inherently incorporated into regular operations. It should feel like a continuous process of improvement, where every new cycle builds upon the process in some way.

Considerations:

- What is the best way to translate all of the derived value in this process into consumable knowledge?
- What TTPs and tradecraft were developed as part of the process, and how should they be nurtured?
- What can be improved with the process, expertise, or access to resources?
- How can we reinforce and reward positive outcomes and improve on those that are lacking?
- How can we reinforce other portions of the full detection engineering lifecycle based on these outcomes?

While conducting research, the environmental architecture, data, and baselines (DPBL) should be consulted often, both to scope the focus and to validate any assumptions made during those phases. Next, we will continue down the DCDL framework and identify how we can translate the research into tangible outcomes or capabilities.